Profiling and improving load times of static assets

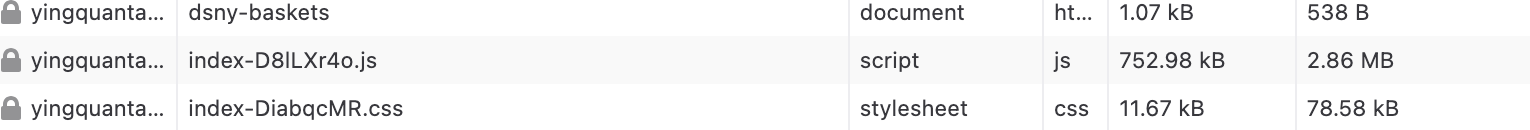

In my project visualizing waste basket locations in NYC, I had been importing CSV files directly in JavaScript, converting them to modules with d3-dsv. These typed imports, together with manually provided type definitions, let us work with more confidence when the modules are imported, but they come at the cost of large module sizes:

Note that the JavaScript asset is 2MB uncompressed.

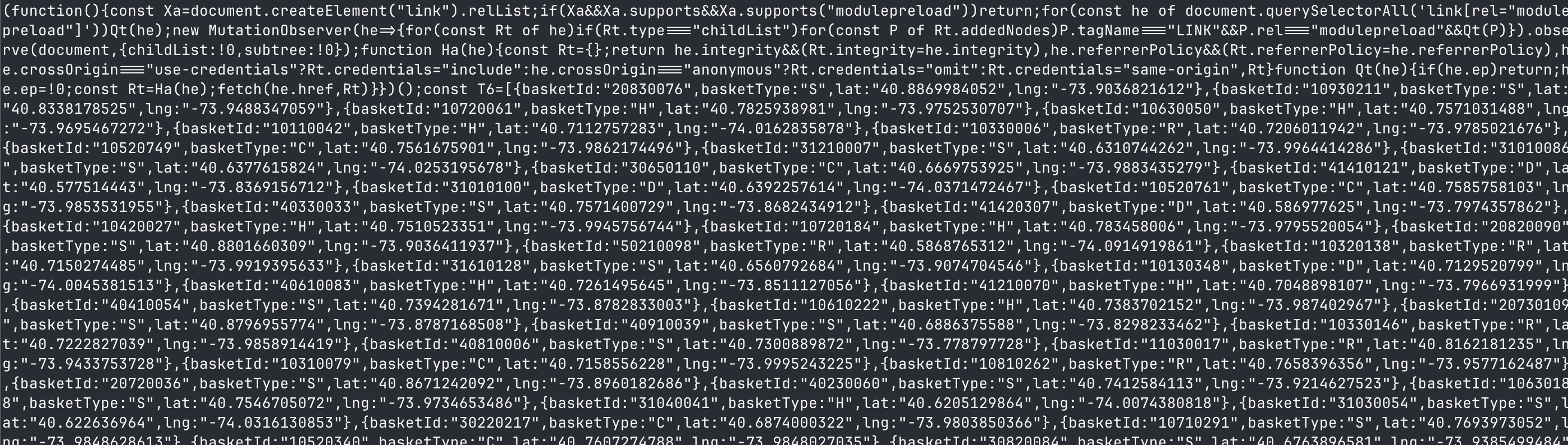

This is because the CSV is parsed into objects, which are then serialized and packaged with the module. Looking into this JS asset shows each parsed row serialized as an object in the bundle.
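To see why the bundle grows, here is a minimal sketch that compares a small CSV string with the JSON serialization of the same rows as objects. The column names (`id`, `lat`, `lng`), the values, and the naive comma-splitting parser are assumptions for illustration; in the project itself, d3-dsv does the parsing. Because every object repeats every column name, the serialized form grows with the number of rows times the size of the header, on top of the quoting and braces around each value.

```typescript
// Hypothetical sample data: the column names and values are made up for illustration.
const csvText = [
  "id,lat,lng",
  "1,40.7128,-74.0060",
  "2,40.7306,-73.9352",
  "3,40.6782,-73.9442",
].join("\n");

// Naive parser: splits on newlines and commas (no support for quoted fields).
// Like d3-dsv's csvParse, it returns one object per row, keyed by the header.
function parseRows(text: string): Record<string, string>[] {
  const [headerLine, ...lines] = text.trim().split("\n");
  const headers = headerLine.split(",");
  return lines.map((line) => {
    const values = line.split(",");
    const row: Record<string, string> = {};
    headers.forEach((h, i) => {
      row[h] = values[i];
    });
    return row;
  });
}

const rows = parseRows(csvText);
// Serializing the rows repeats every column name inside every object.
const serialized = JSON.stringify(rows);

console.log(`CSV: ${csvText.length} chars, serialized objects: ${serialized.length} chars`);
```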

Can we improve this? Let’s see.

Downloading CSVs as a separate asset

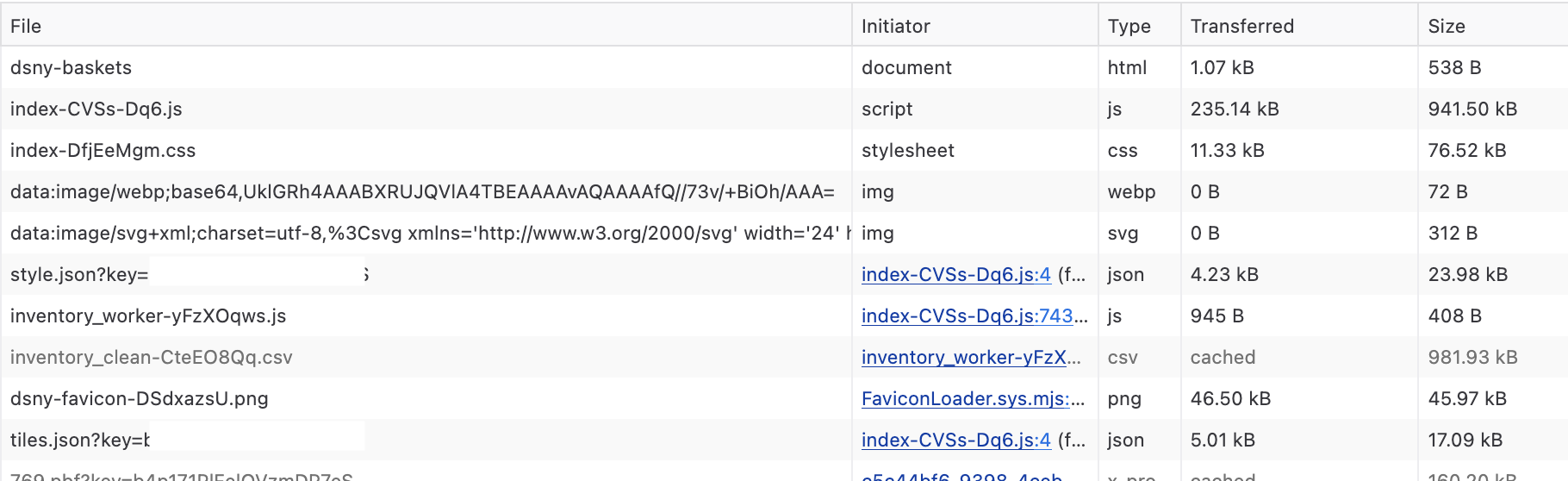

The first thing I tried was to stop converting the CSV file into a module and instead fetch and parse it at runtime.
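A minimal sketch of fetching and parsing the CSV at runtime on the main thread. The `Basket` fields, the `/baskets.csv` URL, and the naive parser are assumptions; in the project, d3-dsv's `csvParse` (which also accepts a row conversion function) would do the parsing. The fetch function is passed in as a parameter so the sketch can run outside a browser.

```typescript
// Hypothetical row shape; the real dataset's columns may differ.
interface Basket {
  id: string;
  lat: number;
  lng: number;
}

// The small part of the Fetch API this sketch relies on, so it can be tested without a browser.
type FetchLike = (url: string) => Promise<{ ok: boolean; status: number; text(): Promise<string> }>;

// Converts CSV text into typed rows. Assumes a header row and no quoted fields.
function parseBaskets(text: string): Basket[] {
  const [header, ...lines] = text.trim().split("\n");
  const cols = header.split(",");
  // Look up each column's position once; fail loudly if the CSV layout changes.
  const [iId, iLat, iLng] = ["id", "lat", "lng"].map((name) => {
    const i = cols.indexOf(name);
    if (i < 0) throw new Error(`Missing column: ${name}`);
    return i;
  });
  return lines.map((line) => {
    const v = line.split(",");
    return { id: v[iId], lat: Number(v[iLat]), lng: Number(v[iLng]) };
  });
}

// Downloads the CSV as a separate asset instead of bundling it into the JS module.
async function loadBaskets(url: string, fetchImpl: FetchLike): Promise<Basket[]> {
  const res = await fetchImpl(url);
  if (!res.ok) throw new Error(`Failed to load ${url}: ${res.status}`);
  return parseBaskets(await res.text());
}

// In the browser: const baskets = await loadBaskets("/baskets.csv", fetch);
```

Keeping the request and the parse in one async function means the rest of the page can start the fetch early and await the result only where the points are actually drawn.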

This results in a much smaller JS bundle. I also moved the CSV parsing into a worker thread so as not to block the main thread. However, profiling showed that the parse was very fast for a dataset of 20k points, so the added complexity of spinning up a web worker was not really worth it. The web worker code, however, was simple enough.

The worker executes immediately on load: it downloads the CSV asset, parses it, and posts a message to the main thread when done. In main.ts, the response is wrapped in a promise so that the rest of the script can keep executing while the CSV loads and parses. I think this is the right approach when data sizes get really large, though where that threshold lies is likely project dependent.
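A minimal sketch of the promise wrapping on the main thread, assuming a module worker that fetches, parses, and posts the rows once. The file name `csv.worker.ts`, the `/baskets.csv` URL, and the `firstMessage` helper are hypothetical; the browser-only parts are shown as comments, and the helper is typed against a small interface so it can run without a real `Worker`.

```typescript
// Worker side (hypothetical csv.worker.ts), shown as comments because it needs a browser:
//   import { csvParse } from "d3-dsv";
//   fetch("/baskets.csv")
//     .then((res) => res.text())
//     .then((text) => postMessage(csvParse(text)));
//
// Main side (main.ts); Vite bundles workers created with this URL pattern:
//   const worker = new Worker(new URL("./csv.worker.ts", import.meta.url), { type: "module" });
//   const rowsPromise = firstMessage<Record<string, string>[]>(worker);

// The minimal part of the Worker interface this sketch uses.
interface MessageSource<T> {
  addEventListener(
    type: "message",
    listener: (ev: { data: T }) => void,
    options?: { once?: boolean },
  ): void;
}

// Wraps the worker's first "message" event in a promise, so the rest of main.ts keeps
// running while the CSV downloads and parses; await it only where the data is needed.
function firstMessage<T>(source: MessageSource<T>): Promise<T> {
  return new Promise<T>((resolve) => {
    source.addEventListener("message", (ev) => resolve(ev.data), { once: true });
  });
}
```

This sketch never rejects; a fuller version would also listen for the worker's `error` event so a failed download does not leave the promise pending forever.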

At this point I also started asking myself whether the download time of the JS asset was really the most important factor. After all, once compressed, the JS and data assets together are under 1MB.

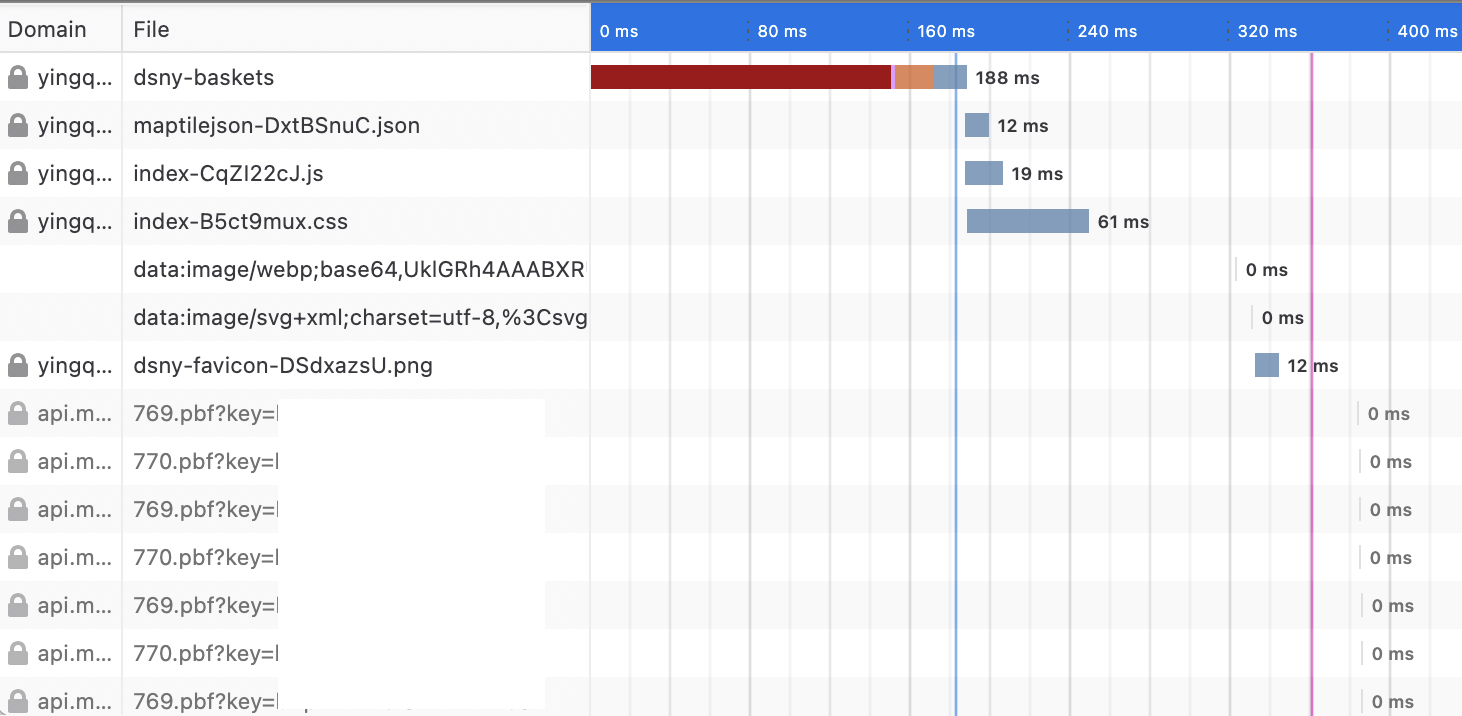
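One way to sanity-check compressed sizes locally is to compress the built asset yourself. A minimal Node sketch, assuming gzip and Brotli at default settings roughly approximate what the host or CDN actually serves (the real encoding and compression level depend on the server); the asset path passed on the command line is up to you.

```typescript
import { readFileSync } from "node:fs";
import { brotliCompressSync, gzipSync } from "node:zlib";

// Reports the raw, gzip, and Brotli sizes of an asset in bytes.
function compressedSizes(bytes: Uint8Array) {
  return {
    raw: bytes.byteLength,
    gzip: gzipSync(bytes).byteLength,
    brotli: brotliCompressSync(bytes).byteLength,
  };
}

// Usage: pass the path of a built asset, e.g. a file under dist/assets/.
const assetPath = process.argv[2];
if (assetPath) {
  console.log(compressedSizes(readFileSync(assetPath)));
}
```

Repetitive content such as the same keys serialized in every row compresses well, which is why the compressed size can be much smaller than the raw 2MB.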

Inspecting network traffic during map load

I’m using the MapTiler API on the free trial to show vector maps. The map styles are beautiful, and I would highly recommend checking it out for mapping projects. The setup instructions are to create a MapLibre map with the style URL pointed at something like https://api.maptiler.com/maps/777daf37-50e3-4c3c-a645-c13a66e712e3/style.json?.... When MapLibre loads, it downloads this style from MapTiler. The latency is hard to measure precisely, but I consistently see this file (and a related tiles.json file) take 100ms to 150ms to download. Furthermore, these requests only start after MapLibre itself has loaded, at around 500ms. The first map tiles only start downloading around 680ms, which means that the user will only start seeing content around that time.

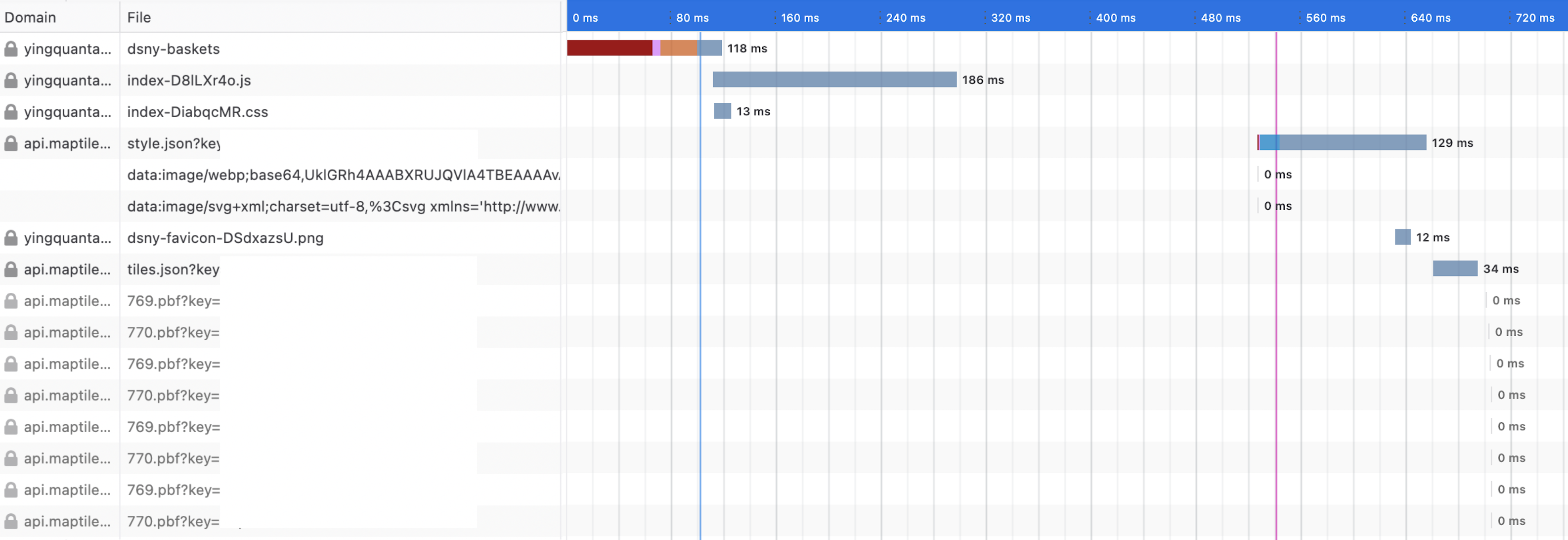
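Besides the DevTools waterfall, the same start times and durations can be read from the Resource Timing API. A minimal sketch, assuming you only care about requests whose URL contains a given fragment; the `timingsFor` helper is hypothetical, and the entries are typed with a small interface so the function can be tested outside a browser.

```typescript
// The fields of a resource timing entry used here (startTime and duration in ms).
interface ResourceEntry {
  name: string;
  startTime: number;
  duration: number;
}

// Picks out requests whose URL contains one of the given fragments and reports when each
// one started (relative to navigation start) and how long it took, rounded to whole ms.
function timingsFor(entries: ResourceEntry[], fragments: string[]) {
  return entries
    .filter((e) => fragments.some((f) => e.name.includes(f)))
    .map((e) => ({
      name: e.name,
      start: Math.round(e.startTime),
      duration: Math.round(e.duration),
    }));
}

// In the browser, once the map is up:
//   console.table(timingsFor(performance.getEntriesByType("resource"), ["style.json", "tiles.json"]));
```

Start times and durations remain available for cross-origin requests such as MapTiler's, although the detailed phase breakdown (DNS, TLS, and so on) is hidden unless the server sends a `Timing-Allow-Origin` header.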

Serving style.json and tiles.json assets

Looking at how quickly my own JS assets were served, I was confident that these files could be served even faster. For one, by bundling the style.json file as a module, I could include it as part of the JS bundle and avoid initiating a separate fetch for it. I could not do the same for the tiles.json file, as inlining it fails a runtime check in MapLibre GL JS.
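Bundling style.json mostly comes down to a JSON import, which Vite handles out of the box, and handing MapLibre the object instead of a URL. Serving tiles.json from the site rather than from MapTiler presumably also means the bundled style has to point its vector source at the local copy. A minimal sketch under that assumption; the `withLocalTileJson` helper, the source id `openmaptiles`, and the minimal style types are hypothetical.

```typescript
// Minimal subset of a MapLibre style document; real styles have many more fields.
interface StyleSource {
  type: string;
  url?: string;
}
interface StyleDoc {
  version: number;
  sources: Record<string, StyleSource>;
  [key: string]: unknown;
}

// Returns a copy of the style in which the listed sources point at self-hosted TileJSON
// URLs instead of the originals. Sources not listed are left untouched; the input is not mutated.
function withLocalTileJson(style: StyleDoc, localUrls: Record<string, string>): StyleDoc {
  const sources: Record<string, StyleSource> = {};
  for (const [id, source] of Object.entries(style.sources)) {
    const local = localUrls[id];
    sources[id] = local ? { ...source, url: local } : source;
  }
  return { ...style, sources };
}

// In main.ts (Vite inlines JSON imports into the bundle):
//   import rawStyle from "./style.json";
//   const style = withLocalTileJson(rawStyle, { openmaptiles: new URL("/tiles.json", location.href).href });
//   new maplibregl.Map({ container: "map", style: style as StyleSpecification });
```

Passing an absolute URL via `new URL(..., location.href)` avoids depending on how relative URLs inside a style are resolved.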

I also use Vite for development and publishing, and while it is supposed to generate module preload directives, it didn’t seem to do so properly here. I realized that if I served tiles.json myself, I could also preload it, so that it is already downloaded by the time MapLibre needs it.
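One way to add a preload manually in a Vite project is a tiny plugin using the `transformIndexHtml` hook, which can return tag descriptors to inject into index.html. A minimal sketch, assuming tiles.json is served at `/tiles.json`; the plugin name `preload-json` and the local `HtmlTag` type (a stand-in for Vite's own descriptor type) are hypothetical. Whether the browser reuses the preloaded response depends on the later request matching it, which for a `fetch()` request generally means preloading with `as="fetch"` and a `crossorigin` attribute.

```typescript
// Local stand-in for the tag descriptor shape Vite accepts from transformIndexHtml.
interface HtmlTag {
  tag: string;
  attrs: Record<string, string>;
  injectTo: "head" | "body" | "head-prepend" | "body-prepend";
}

// Hypothetical Vite plugin: injects <link rel="preload"> tags for JSON assets the map
// will request later, so the browser starts downloading them while the JS loads.
function preloadJson(hrefs: string[]) {
  return {
    name: "preload-json",
    transformIndexHtml(): HtmlTag[] {
      return hrefs.map(
        (href): HtmlTag => ({
          tag: "link",
          // as="fetch" + crossorigin so a later CORS-mode fetch() can match the preload.
          attrs: { rel: "preload", href, as: "fetch", crossorigin: "anonymous" },
          injectTo: "head",
        }),
      );
    },
  };
}

// vite.config.ts: export default defineConfig({ plugins: [preloadJson(["/tiles.json"])] });
```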

With this approach, the first tile fetch now starts at 390ms, down from around 680ms. Without an easy way to profile the GL draw cycle on the canvas, I’m not sure exactly when in the load cycle the screen is filled. However, the lower bound on load time dropped dramatically.

Takeaways

I initially thought that reducing large asset sizes would lead to faster load times, but profiling showed that serving my assets as efficiently as possible delivered the largest time-to-first-draw gains.

- For smallish datasets, embedding data directly into JS results in a more consistent load time. In any case, these differences are dwarfed by the gains from proper caching at a CDN layer, which my static website already has.

- Preload directives can significantly speed up page load time. In this case, I knew I wanted to load the JSON files, so I could add a preload manually.

Next steps

This project includes the script as a module: `<script src="..." type="module">`. According to MDN, module scripts are deferred by default. Between the script download and the start of the tile download, there is a delay of about 100ms. This may be script parsing, or it might be MapLibre loading. If it is the latter, then perhaps inlining the map initialization somewhere on the page could result in even faster load times.
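A rough way to tell those two apart is to record timestamps around map creation. A minimal sketch, assuming it sits in main.ts; the `timeMapLoad` helper and the mark names are hypothetical, and the map is typed with a small interface so the code runs without MapLibre. MapLibre documents its `load` event as firing once the necessary resources are downloaded and the first visually complete render has happened, so `mapLoaded` is also a proxy for when the screen is filled.

```typescript
// Minimal subset of maplibregl.Map used here.
interface LoadEmitter {
  once(type: "load", listener: () => void): unknown;
}

// Recorded at the very top of main.ts, after its imports (including MapLibre) have been
// evaluated: milliseconds since navigation start, comparable to DevTools Network timings.
const scriptStart = performance.now();

// Wraps map construction and resolves with three timestamps, so time spent before the
// map is created can be told apart from the time MapLibre takes to load and first render.
function timeMapLoad(
  createMap: () => LoadEmitter,
): Promise<{ scriptStart: number; mapCreated: number; mapLoaded: number }> {
  const mapCreated = performance.now();
  performance.mark("map:create"); // marks also show up in the DevTools Performance panel
  const map = createMap();
  return new Promise((resolve) => {
    map.once("load", () => {
      performance.mark("map:load");
      resolve({ scriptStart, mapCreated, mapLoaded: performance.now() });
    });
  });
}

// Usage in main.ts:
//   timeMapLoad(() => new maplibregl.Map({ container: "map", style })).then(console.table);
```

Comparing `scriptStart` with the end of the script download in the Network panel roughly covers parsing and evaluating the bundle, while `mapCreated` to the first tile request isolates MapLibre’s own startup.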

Check out the latest iteration of the project here: DSNY-Baskets.